LangChain’s Align Evals closes the evaluator trust gap with prompt-level calibration

As organizations increasingly rely on AI models to check that their applications are effective and reliable, the gap between model-based evaluations and human assessments has become more pronounced. To address this, LangChain has introduced Align Evals, a new feature in LangSmith designed to bring large language model (LLM)-based evaluators closer to human preferences and reduce inconsistencies. Align Evals lets LangSmith users create their own LLM-based evaluators and calibrate them to align more closely with company-specific standards.

According to LangChain, a common complaint from teams is that their evaluation scores do not match what human evaluators would expect. This mismatch leads to confusing comparisons and effort wasted chasing misleading signals. LangChain is one of the few platforms that integrate LLM-based evaluations directly into the testing dashboard. The development of Align Evals was inspired by a study from Eugene Yan, a principal applied scientist at Amazon, which proposed a system to automate parts of the evaluation process.

With Align Evals, businesses can refine their evaluation prompts, compare scores assigned by human evaluators with those generated by LLMs, and establish a baseline alignment score. The company says Align Evals is a crucial step toward better evaluator quality. In the future, LangChain aims to add analytics that track evaluator performance and to automate prompt optimization by generating prompt variations automatically.

To start, users define the evaluation criteria relevant to their application (accuracy for chat apps, for example) and select the data for human review, making sure it contains a balance of positive and negative examples. Developers then assign scores to prompts or task objectives, and these scores act as the benchmark. The goal is to make building LLM-as-a-judge evaluators simpler and more accessible. Next, users write an initial prompt for the model evaluator and refine it based on how its results compare with the human graders' scores. If the model tends to over-score certain responses, for instance, adding clearer negative criteria can improve accuracy.

As enterprises increasingly rely on evaluation frameworks to gauge the reliability, behavior, and auditability of AI systems, a transparent scoring system lets organizations deploy AI applications with more confidence and makes it easier to compare models. Major companies such as Salesforce and AWS have begun to provide tools for performance evaluation. Salesforce’s Agentforce 3 features a command center to monitor agent performance, while AWS offers both human and automated assessments on its Amazon Bedrock platform. Demand for more customized evaluation methods is pushing platforms to build integrated offerings for model evaluation. As more developers and businesses look for effective evaluation tools for LLM workflows, features like Align Evals address a clear need in AI validation.
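The comparison between human and LLM scores described above can be illustrated with a short sketch. This is not the LangSmith or Align Evals API. The `GradedExample` type, the binary pass/fail scale, and the sample data are all assumptions made for illustration. It computes a baseline alignment score as the share of examples where the judge agrees with the human grader, and lists the cases where the judge scored higher than the human.

```python
from dataclasses import dataclass


@dataclass
class GradedExample:
    """Hypothetical record: one example graded by a human and by an LLM judge.

    Scores are assumed to be binary (1 = pass, 0 = fail).
    """
    example_id: str
    human_score: int
    judge_score: int


def alignment_score(examples: list[GradedExample]) -> float:
    """Fraction of examples where the judge's score equals the human's."""
    if not examples:
        raise ValueError("need at least one graded example")
    matches = sum(1 for ex in examples if ex.human_score == ex.judge_score)
    return matches / len(examples)


def overestimates(examples: list[GradedExample]) -> list[str]:
    """IDs of examples the judge scored higher than the human grader did."""
    return [ex.example_id for ex in examples if ex.judge_score > ex.human_score]


# Made-up data: a mix of positive and negative human grades.
examples = [
    GradedExample("a", human_score=1, judge_score=1),
    GradedExample("b", human_score=0, judge_score=1),  # judge over-scores
    GradedExample("c", human_score=0, judge_score=0),
    GradedExample("d", human_score=1, judge_score=1),
]

baseline = alignment_score(examples)
overscored = overestimates(examples)
print(f"baseline alignment: {baseline:.2f}")
print("over-scored examples:", overscored)
```

In a workflow like the one the article describes, a list of over-scored examples is the kind of signal that might prompt adding clearer negative criteria to the evaluator prompt, after which the alignment score would be recomputed against the same human-graded set.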

Apple Unveils AI-Driven Adaptive Power Feature in iOS 26 Update

Apple fans can breathe a sigh of relief as the tech giant has officially launched the iOS 26 update, which is now availa...

Mint | Sep 16, 2025, 16:45

Qualcomm Unveils Snapdragon 8 Elite Gen 5: A Leap Forward for Android Flagships

Qualcomm has officially announced the name of its latest flagship mobile processor, the Snapdragon 8 Elite Gen 5, which ...

Mint | Sep 16, 2025, 17:45

OpenAI Introduces Age-Detection Features for Safer ChatGPT Use Among Minors

In a significant move to enhance user safety, OpenAI's CEO Sam Altman announced the development of an age-detection syst...

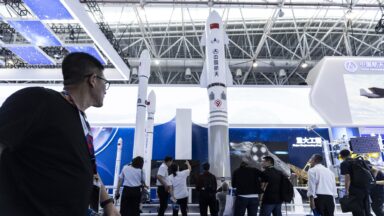

Business Insider | Sep 16, 2025, 15:30China's Space Ambitions Surge: A Growing Challenge for U.S. Dominance

As Jonathan Roll approached the finish line of his master’s degree in science and technology policy at Arizona State Uni...

Ars Technica | Sep 16, 2025, 16:25

Investigation Launched into Tesla Model Y Door Handle Issues

The National Highway Traffic Safety Administration (NHTSA) has initiated a probe into reports regarding malfunctioning d...

TechCrunch | Sep 16, 2025, 15:20